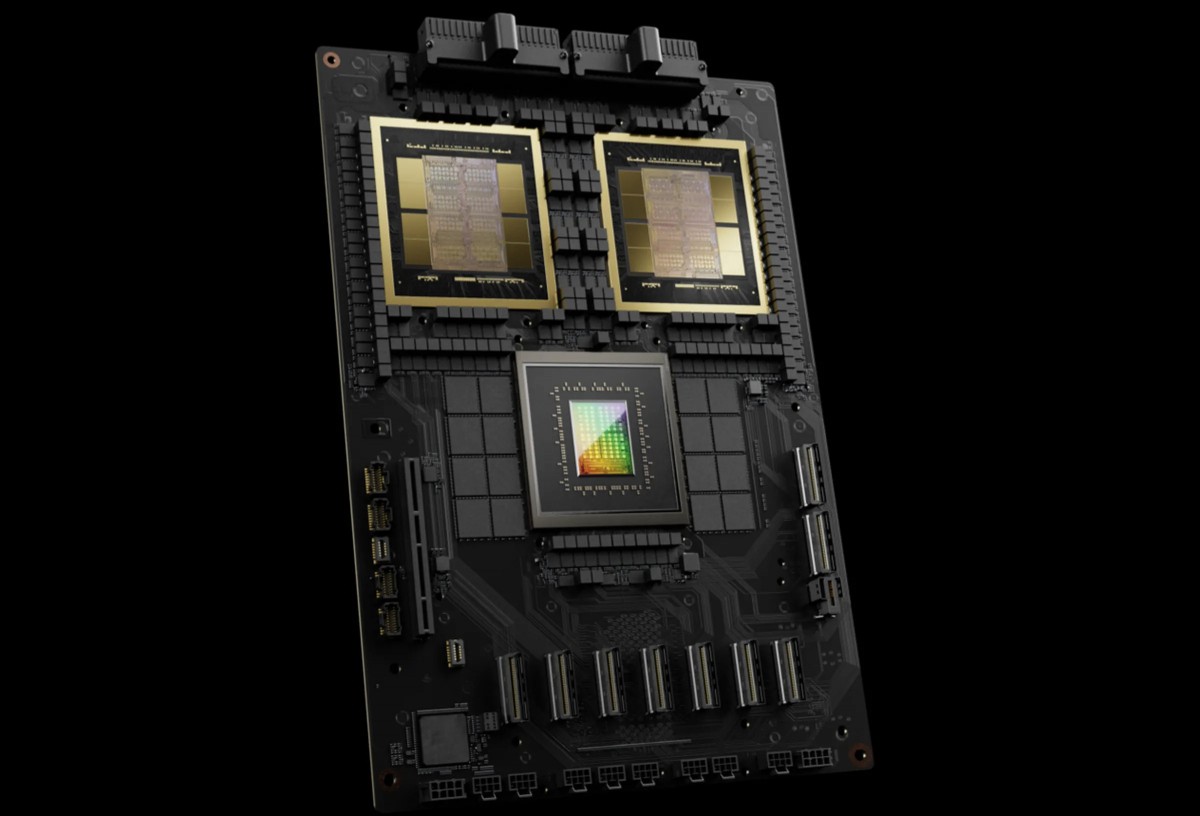

NVIDIA B200

Massive Performance Leap Over Previous Generation

- 3X training performance vs DGX H100

- 15X inference performance vs DGX H100 (per-GPU comparison)

- 72 petaFLOPS training / 144 petaFLOPS inference per system

- Particularly dominant for real-time LLM inference: 58 output tokens/sec/GPU vs 3.5 for H100
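As a quick sanity check on the real-time inference figures quoted above, the following minimal Python sketch computes the per-GPU ratio and a naive per-system throughput. The helper names (`speedup`, `system_throughput`) are hypothetical, the 8-GPU count is taken from the memory figures on this page, and linear scaling across GPUs is an assumption rather than a measured result.

```python
# Vendor-quoted real-time LLM inference figures (output tokens/sec per GPU).
B200_TOKENS_PER_SEC_PER_GPU = 58.0
H100_TOKENS_PER_SEC_PER_GPU = 3.5

# Assumption: 8 GPUs per system, matching the memory figures on this page.
GPUS_PER_SYSTEM = 8


def speedup(new: float, old: float) -> float:
    """Return how many times faster `new` is than `old`."""
    return new / old


def system_throughput(tokens_per_sec_per_gpu: float,
                      gpus: int = GPUS_PER_SYSTEM) -> float:
    """Naive per-system throughput, assuming perfectly linear scaling."""
    return tokens_per_sec_per_gpu * gpus


ratio = speedup(B200_TOKENS_PER_SEC_PER_GPU, H100_TOKENS_PER_SEC_PER_GPU)
print(f"Per-GPU ratio: {ratio:.1f}x")
print(f"B200 system (naive): {system_throughput(B200_TOKENS_PER_SEC_PER_GPU):.0f} tokens/sec")
print(f"H100 system (naive): {system_throughput(H100_TOKENS_PER_SEC_PER_GPU):.0f} tokens/sec")
```

The ratio of the two quoted token rates comes out slightly above the headline 15X figure; the two numbers may come from different benchmark configurations, so treat this as arithmetic on the published values only.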

Unprecedented Memory Capacity and Bandwidth

- 1.4TB total GPU memory across 8 GPUs (180GB per GPU)

- 64TB/s memory bandwidth

- This memory footprint accommodates the largest enterprise AI workloads, including trillion-parameter models and multimodal applications that previous generations would struggle to hold in GPU memory
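To make the capacity claim concrete, here is a rough Python sketch that estimates whether a model's weights alone fit in the 8 x 180GB of GPU memory quoted above. It is a weights-only estimate: it ignores KV cache, activations, optimizer state, and framework overhead, uses decimal gigabytes, and the bytes-per-parameter table and function names are illustrative assumptions.

```python
# Memory figures quoted on this page.
GPU_COUNT = 8
GB_PER_GPU = 180
TOTAL_GB = GPU_COUNT * GB_PER_GPU  # 1440 GB, i.e. roughly 1.4 TB

# Assumption: bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}


def weights_gb(num_params: float, precision: str) -> float:
    """Weights-only footprint in decimal GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits(num_params: float, precision: str, total_gb: float = TOTAL_GB) -> bool:
    """True if the weights alone fit in total GPU memory.

    Ignores KV cache, activations, and optimizer state, which can
    dominate during training or long-context inference.
    """
    return weights_gb(num_params, precision) <= total_gb


ONE_TRILLION = 1e12
for precision in BYTES_PER_PARAM:
    size = weights_gb(ONE_TRILLION, precision)
    verdict = "fits" if fits(ONE_TRILLION, precision) else "does not fit"
    print(f"1T params @ {precision}: {size:.0f} GB, {verdict} in {TOTAL_GB} GB")
```

Under these assumptions, a trillion-parameter model's weights fit in a single system at 8-bit or 4-bit precision but not at 16-bit, which is why precision choice matters when sizing deployments against the 1.4TB figure.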

Unified Platform for Complete AI Lifecycle

A single system handles training, fine-tuning, and inference without separate infrastructure for each stage. This consolidation reduces:

- Data movement between systems

- Infrastructure complexity and cost

- Time-to-production for AI models

- Operational overhead

Use Cases

Large Model Inference

Run massive models with predictable latency. Optimize for throughput, batch size, and performance per watt.

Generative AI applications for text, image, and audio.

Scaling ML infrastructure as your customer base grows.