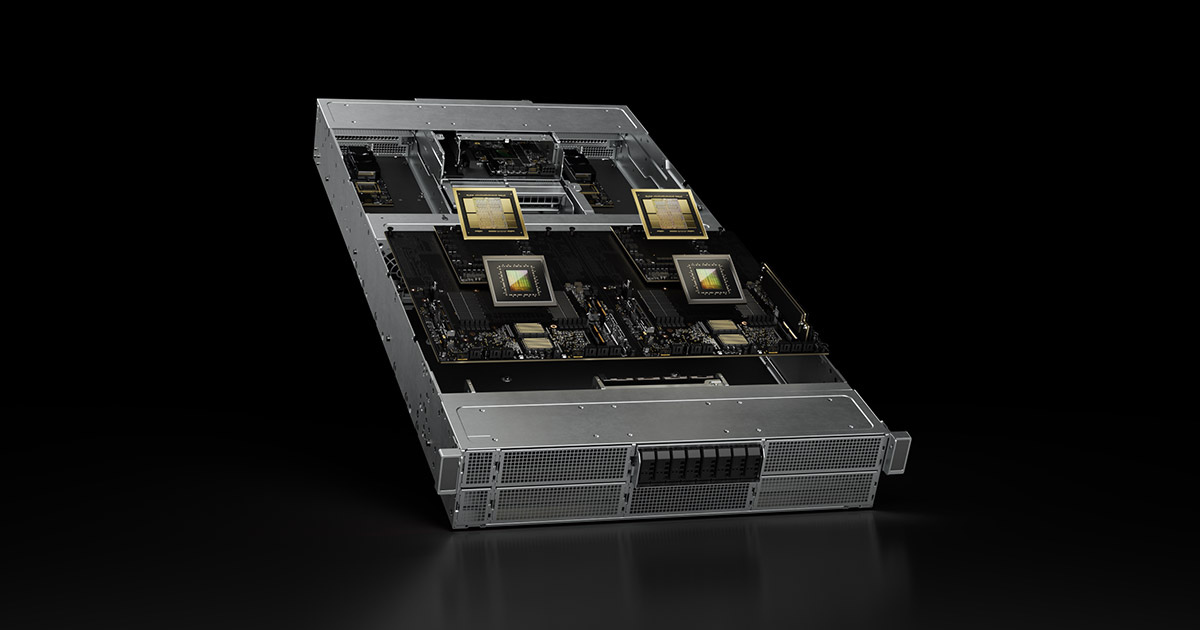

NVIDIA GB200 NVL72

Rack-Scale Architecture with Massive 72-GPU NVLink Domain

- 130 TB/s NVLink aggregate bandwidth across 72 Blackwell GPUs

- Acts as a single massive GPU, the largest NVLink domain ever offered

- 13.4 TB total HBM3E memory (186 GB per GPU × 72)

- Eliminates traditional multi-node communication bottlenecks for trillion-parameter models
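
The totals in this list can be cross-checked with simple arithmetic. The sketch below is illustrative only: the variable names are made up for this example, decimal units (1 TB = 1000 GB) are assumed, and the per-GPU NVLink figure assumes the aggregate bandwidth is split evenly across all 72 GPUs.

```python
# Figures quoted in the list above.
GPUS = 72
HBM_PER_GPU_GB = 186
NVLINK_AGGREGATE_TBPS = 130

# Total HBM3E capacity, assuming decimal units (1 TB = 1000 GB).
total_hbm_tb = GPUS * HBM_PER_GPU_GB / 1000

# Implied NVLink bandwidth per GPU, assuming an even split of the aggregate.
nvlink_per_gpu_tbps = NVLINK_AGGREGATE_TBPS / GPUS

print(f"Total HBM3E: {total_hbm_tb:.1f} TB")               # Total HBM3E: 13.4 TB
print(f"NVLink per GPU: {nvlink_per_gpu_tbps:.1f} TB/s")   # NVLink per GPU: 1.8 TB/s
```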

30X Real-Time LLM Inference Performance vs H100

- 1.44 exaFLOPS FP4 sparse performance per rack (720 PFLOPS dense)

- Second-generation Transformer Engine with FP4 microscaling

- Optimized for real-time inference on trillion-parameter models (50 ms token-to-token latency)

- 4X faster training at massive scale vs H100 clusters
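
As a rough sanity check on the figures above, the following sketch derives the per-GPU FP4 throughput and the per-stream token rate implied by the quoted latency. It is a back-of-the-envelope calculation, not a benchmark: the variable names are hypothetical, and the token rate assumes a single stream that emits one token every 50 ms.

```python
# Figures quoted in the list above.
GPUS = 72
FP4_SPARSE_EXAFLOPS = 1.44
FP4_DENSE_PFLOPS = 720
TOKEN_LATENCY_MS = 50

# Convert the rack-level sparse figure to PFLOPS (1 exaFLOPS = 1000 PFLOPS).
sparse_pflops = FP4_SPARSE_EXAFLOPS * 1000

# Ratio between the sparse and dense figures.
sparsity_speedup = sparse_pflops / FP4_DENSE_PFLOPS

# Per-GPU share, assuming the rack total is spread evenly over 72 GPUs.
sparse_per_gpu = sparse_pflops / GPUS
dense_per_gpu = FP4_DENSE_PFLOPS / GPUS

# Tokens per second for one stream, assuming one token per latency interval.
tokens_per_second = 1000 / TOKEN_LATENCY_MS

print(f"Sparse/dense ratio: {sparsity_speedup:.1f}x")            # 2.0x
print(f"FP4 sparse per GPU: {sparse_per_gpu:.0f} PFLOPS")        # 20 PFLOPS
print(f"FP4 dense per GPU: {dense_per_gpu:.0f} PFLOPS")          # 10 PFLOPS
print(f"Tokens per second per stream: {tokens_per_second:.0f}")  # 20
```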

Integrated CPU+GPU Architecture with Extreme Energy Efficiency

- 36 Grace CPUs + 72 Blackwell GPUs in rack-scale liquid-cooled design

- 25X better performance-per-watt vs air-cooled H100 infrastructure

- 2,592 Arm Neoverse V2 CPU cores with 17 TB LPDDR5X memory

- NVLink-C2C interconnect provides 900 GB/s CPU-to-GPU bandwidth per superchip
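
The CPU-side numbers above also break down per Grace CPU. The sketch below works through that arithmetic under two stated assumptions that are not in the list itself: each superchip contains exactly one Grace CPU, and memory uses decimal units. Because the 17 TB total is a rounded figure, the per-CPU memory value is approximate.

```python
# Figures quoted in the list above.
GRACE_CPUS = 36
GPUS = 72
CPU_CORES = 2592
LPDDR5X_TOTAL_TB = 17
C2C_GBPS_PER_SUPERCHIP = 900

# Cores and GPUs attached to each Grace CPU.
cores_per_cpu = CPU_CORES // GRACE_CPUS
gpus_per_cpu = GPUS // GRACE_CPUS

# Approximate LPDDR5X per CPU (1 TB = 1000 GB; the 17 TB total is rounded).
lpddr5x_per_cpu_gb = LPDDR5X_TOTAL_TB * 1000 / GRACE_CPUS

# Aggregate NVLink-C2C bandwidth, assuming one superchip per Grace CPU.
c2c_aggregate_tbps = GRACE_CPUS * C2C_GBPS_PER_SUPERCHIP / 1000

print(f"Cores per Grace CPU: {cores_per_cpu}")                  # 72
print(f"GPUs per Grace CPU: {gpus_per_cpu}")                    # 2
print(f"LPDDR5X per Grace CPU: ~{lpddr5x_per_cpu_gb:.0f} GB")   # ~472 GB
print(f"Aggregate NVLink-C2C: {c2c_aggregate_tbps:.1f} TB/s")   # 32.4 TB/s
```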

Use Cases

Large-Model Inference

Run large models with predictable latency. Optimize throughput, batch size, and performance per watt.

Generative AI applications for text, image, and audio.

Scale your ML infrastructure as your customer base grows.