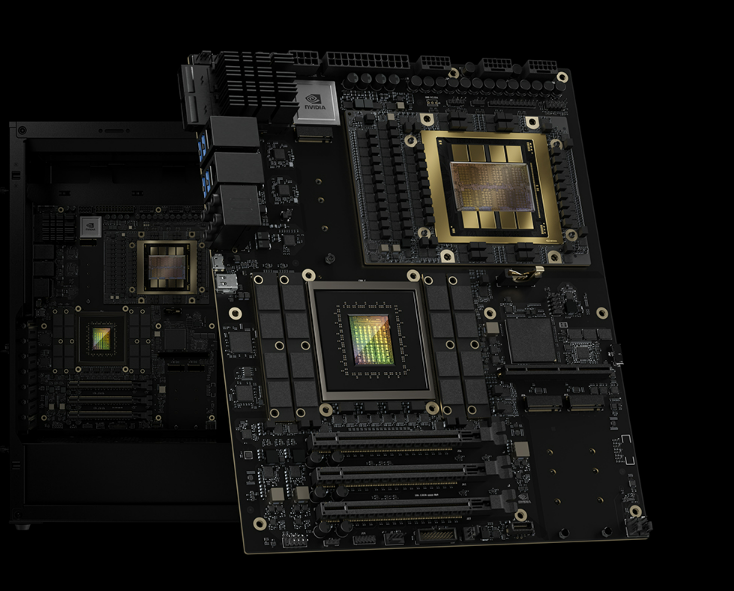

NVIDIA GB300 NVL72

Purpose-Built for AI Reasoning with 50X AI Factory Output Improvement

- 10X boost in user responsiveness (tokens per second per user)

- 5X improvement in throughput (TPS per megawatt)

- Combined, a 50X increase in overall AI factory output vs Hopper-based platforms

- Optimized for test-time scaling inference (DeepSeek-R1 and o1/o3-style reasoning models)
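A minimal sketch of how the headline number relates to the two gains listed above. It assumes the 50X figure is simply the product of the 10X per-user responsiveness gain and the 5X throughput-per-megawatt gain. The page says "combined" but does not state the benchmark methodology. The constant and function names are hypothetical.

```python
# Minimal sketch: ASSUMES the 50X figure is the product of the two gains
# listed above; the exact benchmark methodology is not given on this page.

USER_RESPONSIVENESS_GAIN = 10.0  # tokens/s per user, relative to Hopper
THROUGHPUT_PER_MW_GAIN = 5.0     # tokens/s per megawatt, relative to Hopper


def combined_output_gain(responsiveness_gain: float, throughput_gain: float) -> float:
    """Combine two independent speedup factors multiplicatively."""
    return responsiveness_gain * throughput_gain


if __name__ == "__main__":
    gain = combined_output_gain(USER_RESPONSIVENESS_GAIN, THROUGHPUT_PER_MW_GAIN)
    print(f"Combined AI factory output gain: {gain:.0f}X")
```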

Blackwell Ultra GPUs with Enhanced Reasoning Performance

- 2X attention performance vs standard Blackwell B200 GPUs

- 1.5X more dense FP4 Tensor Core compute (1,080 PFLOPS dense per 72-GPU rack vs 720 PFLOPS for GB200 NVL72)

- 288GB HBM3E per GPU (50% more than B200's 192GB)

- 20.7TB total HBM3E across 72 GPUs for massive batch sizes
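The memory and compute figures above can be cross-checked with simple arithmetic. This is a back-of-the-envelope sketch that uses only the numbers on this page. It assumes "TB" means decimal terabytes (1 TB = 1,000 GB) and that the PFLOPS figures are per 72-GPU rack. The per-GPU FP4 value is derived here, not quoted.

```python
# Back-of-the-envelope check of the memory and compute figures quoted above.
# Assumption: "TB" is read as decimal terabytes (1 TB = 1,000 GB).

GPUS_PER_RACK = 72
HBM_PER_GPU_GB = 288           # Blackwell Ultra, per the list above
HBM_PER_B200_GB = 192          # standard B200, per the list above
FP4_DENSE_PFLOPS_RACK = 1_080  # Blackwell Ultra rack, dense FP4
FP4_DENSE_PFLOPS_GB200 = 720   # GB200 rack, dense FP4


def percent_increase(new: float, old: float) -> float:
    """Relative increase of `new` over `old`, in percent."""
    return (new - old) / old * 100


def rack_hbm_tb(gpus: int, hbm_per_gpu_gb: float) -> float:
    """Total HBM across the rack, in decimal terabytes."""
    return gpus * hbm_per_gpu_gb / 1_000


if __name__ == "__main__":
    print(f"HBM per GPU vs B200: +{percent_increase(HBM_PER_GPU_GB, HBM_PER_B200_GB):.0f}%")
    print(f"Rack HBM3E total: {rack_hbm_tb(GPUS_PER_RACK, HBM_PER_GPU_GB):.1f} TB")
    print(f"Dense FP4 ratio vs GB200: {FP4_DENSE_PFLOPS_RACK / FP4_DENSE_PFLOPS_GB200:.1f}X")
    print(f"Implied dense FP4 per GPU: {FP4_DENSE_PFLOPS_RACK / GPUS_PER_RACK:.0f} PFLOPS")
```

Running it prints +50%, 20.7 TB, 1.5X and an implied 15 PFLOPS of dense FP4 per GPU. These results are consistent with the list.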

Integrated 800Gb/s Networking for Multi-Rack Scale-Out

- ConnectX-8 SuperNICs with 800Gb/s per GPU (2X vs GB200's 400Gb/s)

- Enables efficient multi-rack scaling with NVIDIA Quantum-X800 InfiniBand or Spectrum-X Ethernet

- 130TB/s NVLink bandwidth within the rack (same as GB200)

- Designed for hyperscale AI factory deployments with thousands of GPUs
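The per-GPU link rates above can be turned into rack-level totals. This sketch assumes Gb/s means gigabits per second and TB/s means decimal terabytes per second. It also assumes every GPU drives its NIC at line rate at the same time, so the result is an upper bound rather than a measured figure. The function name is hypothetical.

```python
# Converts the per-GPU link rates quoted above into rack-level totals.
# Assumptions: Gb/s = gigabits/s, TB/s = decimal terabytes/s, and all 72
# GPUs drive their scale-out NIC at line rate simultaneously (an upper bound).

GPUS_PER_RACK = 72
SCALE_OUT_GBITS_PER_GPU = 800        # ConnectX-8 SuperNIC
SCALE_OUT_GBITS_PER_GPU_GB200 = 400  # previous generation, per the list above
NVLINK_RACK_TBYTES = 130             # aggregate NVLink bandwidth, whole rack


def rack_scale_out_tbytes(gpus: int, gbits_per_gpu: float) -> float:
    """Aggregate scale-out bandwidth in TB/s (8 bits per byte, 1,000 GB per TB)."""
    return gpus * gbits_per_gpu / 8 / 1_000


if __name__ == "__main__":
    new = rack_scale_out_tbytes(GPUS_PER_RACK, SCALE_OUT_GBITS_PER_GPU)
    old = rack_scale_out_tbytes(GPUS_PER_RACK, SCALE_OUT_GBITS_PER_GPU_GB200)
    print(f"Scale-out per rack: {new:.1f} TB/s (GB200: {old:.1f} TB/s)")
    print(f"NVLink per GPU: {NVLINK_RACK_TBYTES / GPUS_PER_RACK:.2f} TB/s")
    print(f"NVLink-to-scale-out ratio: {NVLINK_RACK_TBYTES / new:.0f}:1")
```

Under these assumptions, each rack gets about 7.2 TB/s of scale-out bandwidth, compared with about 130 TB/s of in-rack NVLink (roughly 18:1). This gap is one reason the most bandwidth-hungry traffic is typically kept within the NVLink domain.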

Use Cases

Large-Model Inference

Run large models with predictable latency. Optimize throughput, batch size, and performance per watt.
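Tuning throughput, batch size and performance per watt usually means sweeping batch sizes. You then pick the point with the best throughput per megawatt that still meets a per-user speed target. The sketch below illustrates that selection. Every number in `SAMPLE_SWEEP` is hypothetical rather than vendor data, and `OperatingPoint` and `best_point` are illustrative names, not part of any NVIDIA API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    """One hypothetical serving configuration (all numbers illustrative)."""
    batch_size: int               # concurrent user streams
    tokens_per_s_per_user: float  # per-user responsiveness
    power_kw: float               # measured draw at this batch size

    @property
    def throughput_tps(self) -> float:
        # Aggregate tokens/s across all concurrent users.
        return self.batch_size * self.tokens_per_s_per_user

    @property
    def tps_per_mw(self) -> float:
        # Throughput normalized by power (1 MW = 1,000 kW).
        return self.throughput_tps / (self.power_kw / 1_000)

    @property
    def inter_token_latency_ms(self) -> float:
        # Average time between generated tokens for a single user.
        return 1_000 / self.tokens_per_s_per_user


def best_point(points, min_tokens_per_s_per_user):
    """Highest TPS per MW among points that still meet the per-user target."""
    eligible = [p for p in points if p.tokens_per_s_per_user >= min_tokens_per_s_per_user]
    if not eligible:
        return None
    return max(eligible, key=lambda p: p.tps_per_mw)


# Hypothetical measurements from a batch-size sweep, NOT vendor data.
SAMPLE_SWEEP = [
    OperatingPoint(batch_size=8, tokens_per_s_per_user=200.0, power_kw=120.0),
    OperatingPoint(batch_size=64, tokens_per_s_per_user=80.0, power_kw=130.0),
    OperatingPoint(batch_size=256, tokens_per_s_per_user=25.0, power_kw=135.0),
]

if __name__ == "__main__":
    choice = best_point(SAMPLE_SWEEP, min_tokens_per_s_per_user=50.0)
    print(f"batch={choice.batch_size}: {choice.tps_per_mw:,.0f} TPS/MW, "
          f"{choice.inter_token_latency_ms:.1f} ms/token")
```

With a 50 tokens/s-per-user floor, the sample data selects batch size 64. Batch size 256 has higher TPS per MW but is too slow for each user. This is the same responsiveness-versus-throughput trade-off described at the top of the page.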

Generative AI applications for text, image, and audio.

Scaling ML infrastructure as your customer base grows.